Let’s say our application needs to find all users within 1 km of an existing user. How long can we expect this to take?

The performance of this query is clearly going to depend on how many users we have and how they’re distributed. There’s no better way to estimate query performance than to run our test queries on test data sets of varying sizes. But all test data is not created equal. Two easy pitfalls are:

- Too few test points. If your test dataset is too small, you may mask the effect of a missing spatial index, for example.

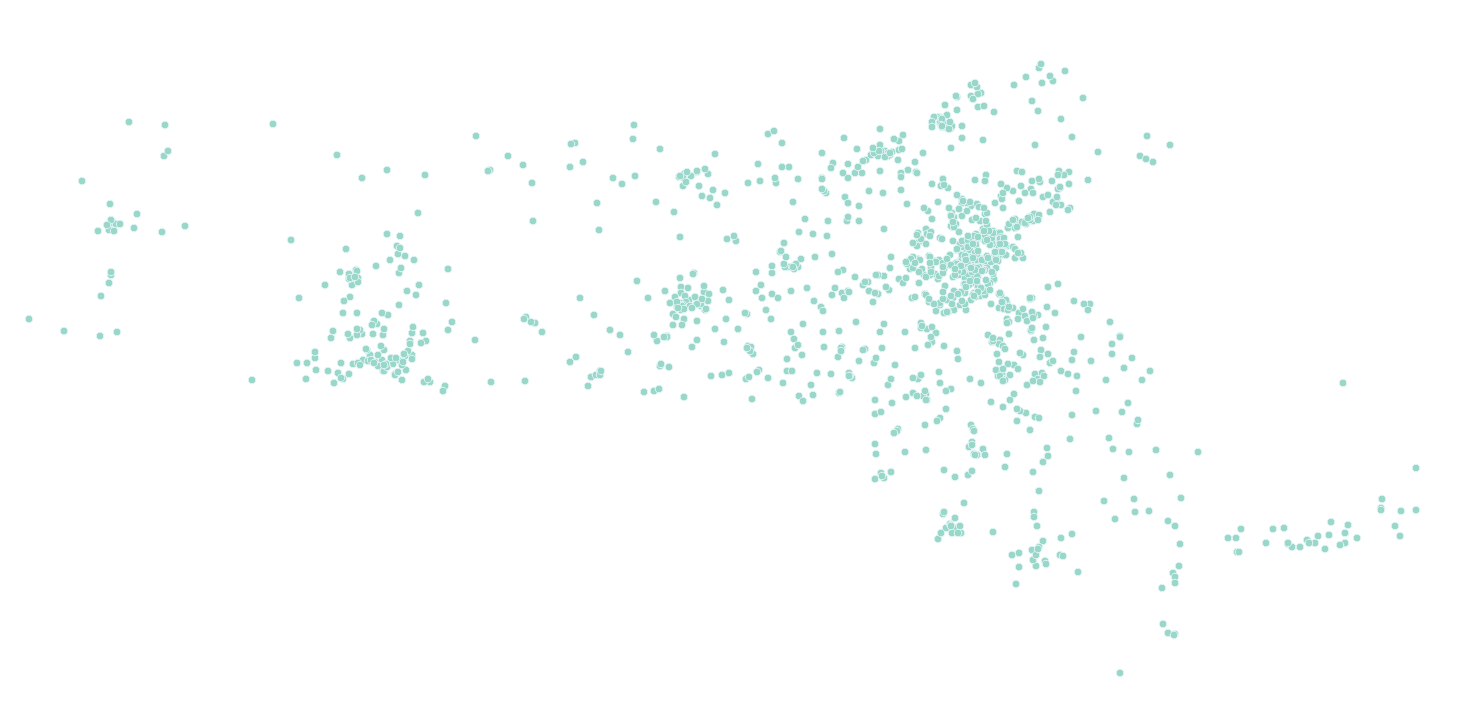

- Unrealistically distributed test points. If you try to get enough data by stuffing a bunch of points on a grid, or stacked on top of each other, your test query runtimes won’t reflect the range you can expect to see under real usage. With real data your query will be slower for a user in Manhattan than a user in Wyoming, and you want to see this variability in your testing.

This post will show a simple method to generate test user data, using a new feature of PostGIS 2.3.

We’ll be generating our test users based on US Census data. The smallest geographic unit for which Census data is available is the block, and TIGER handily provides a set of Census block shapefiles with the 2010 population directly appended as a field. These files are available at this link.

For this example, I’ll use Massachusetts. To start, we’ll fetch the file:

wget http://www2.census.gov/geo/tiger/TIGER2010BLKPOPHU/tabblock2010_25_pophu.zipUnzip it to a temp directory:

unzip tabblock2010_25_pophu.zip -d /tmp/blocksAnd load it to Postgres:

shp2pgsql -D -I -s 4269 /tmp/blocks/tabblock2010_25_pophu.shp | psql postgresAmong the many gems quietly introduced in PostGIS 2.3 is ST_GeneratePoints. It generates a specified number of random points within a polygon. We can use this to generate a point for each person that resides in each block. ST_GeneratePoints is going to produce a MultiPoint, so we’ll follow up with ST_Dump to get one row per person.

1

2

3

4

5

6

7

8

9

10

11

12

CREATE TABLE users AS

SELECT

row_number() OVER() AS user_id,

geom::geography AS geog

FROM

(SELECT

(ST_Dump(ST_GeneratePoints(geom, pop10 * 0.03))).geom

FROM tabblock2010_25_pophu

) sq;

ALTER TABLE users ADD PRIMARY KEY (user_id);

CREATE INDEX ON users USING GIST(geog);

We’re assuming that 3% of the population is using our service. On my laptop, this takes 7 seconds to generate about 195,000 points.

If you’re not sure what sample percentage to use, or if you want to take multiple samples of different sizes, you could create a table with 100% of the population and quickly subset it using the Postgres TABLESAMPLE command. So our command to get that 3% sample would be:

1

2

3

CREATE TABLE users_subset AS

SELECT * FROM users

TABLESAMPLE BERNOULLI (3);

Both of these methods are fast and simple ways to generate a realistic set of test data.